If you are filling in OEDA (Oracle Exadata Deployment Assistant) for an Exadata deployment, there are a few things you should take care of. Most of the screens are self-explanatory, but some parts need a little more attention. I am running the August version, and the screenshots below are from that version.

- On the Define customer networks screen, the client network is the network your data actually flows over. It is typically bonded for high availability, and depending on the network in your data center you have to choose between 1/10 GbE copper and 10 GbE optical.

- If you are going to use trunk VLANs for your client network, remember to enable them by clicking the Advanced button and entering the relevant VLAN ID.

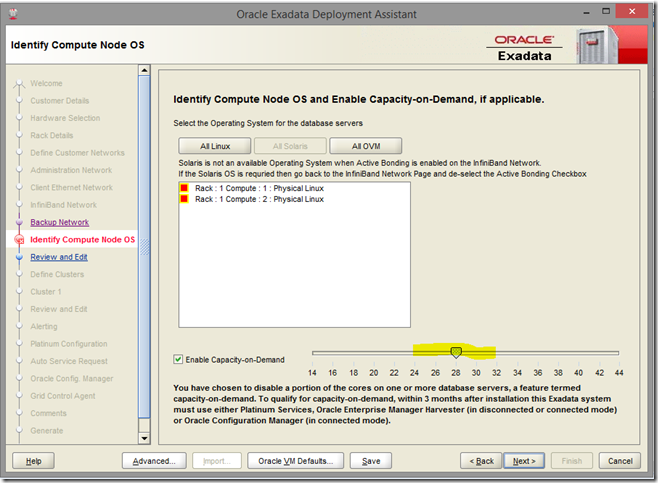
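Before typing VLAN IDs into the Advanced dialog, it can be worth sanity-checking the values the network team gave you. The sketch below is a hypothetical helper, not part of OEDA. It only assumes the IEEE 802.1Q rule that VLAN IDs 0 and 4095 are reserved, so usable IDs run from 1 to 4094; the network names and IDs in the example are made up.

```python
# Hypothetical pre-flight check; not part of OEDA.
# IEEE 802.1Q reserves VLAN IDs 0 and 4095, so usable IDs are 1..4094.
VLAN_MIN = 1
VLAN_MAX = 4094


def vlan_problems(vlans: dict[str, int]) -> list[str]:
    """Return one message for every network whose VLAN ID is outside 1..4094."""
    problems = []
    for network, vlan_id in vlans.items():
        if not VLAN_MIN <= vlan_id <= VLAN_MAX:
            problems.append(
                f"{network}: VLAN ID {vlan_id} is outside {VLAN_MIN}-{VLAN_MAX}"
            )
    return problems


if __name__ == "__main__":
    # Example values only; use the IDs your network team assigned.
    planned = {"client": 110, "backup": 4095}
    for msg in vlan_problems(planned):
        print(msg)
```

An empty list means every ID is at least in the valid range; whether the switch ports are actually trunked for those VLANs still has to be confirmed with the network team.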

- If not all the cores are licensed, remember to enable Capacity on Demand (COD) on the Identify Compute node OS screen.

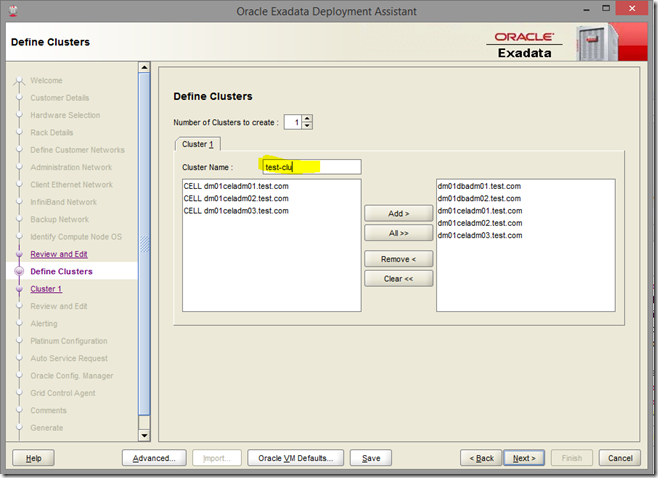

- On the Define clusters screen, make sure you enter a cluster name that is unique across your environment.

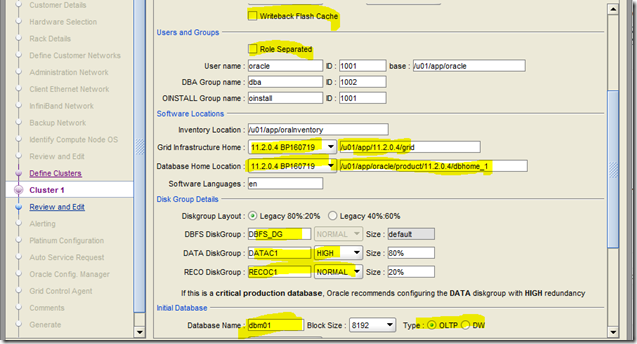
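One way to avoid a clash is to check the proposed name against the cluster names you already use before entering it. The sketch below is hypothetical: the `existing` set is something you would have to gather yourself, and the naming rule it encodes (1 to 15 characters, letters, digits and hyphens, starting with a letter, compared case-insensitively) is an assumption based on the Grid Infrastructure installation guides, so verify it for your release.

```python
import re

# Assumed Grid Infrastructure naming rule; verify against the guide for your release.
CLUSTER_NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9-]{0,14}")


def check_cluster_name(name: str, existing: set[str]) -> list[str]:
    """Return problems with a proposed cluster name (an empty list means it looks fine)."""
    problems = []
    if not CLUSTER_NAME_RE.fullmatch(name):
        problems.append("must be 1-15 letters, digits or hyphens, starting with a letter")
    # Compare case-insensitively so 'EXAPRD-CL' and 'exaprd-cl' count as duplicates.
    if name.lower() in {n.lower() for n in existing}:
        problems.append("already used elsewhere in the environment")
    return problems


if __name__ == "__main__":
    # Hypothetical inventory of cluster names already in use.
    in_use = {"exaprd-cl", "exadev-cl"}
    print(check_cluster_name("EXAPRD-CL", in_use))
    print(check_cluster_name("exauat-cl", in_use))
```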

- The cluster details screen captures some of the most important settings, such as:

  - Whether you want flash cache in WriteBack mode instead of WriteThrough.

  - Whether you want a role-separated install (separate owners for the Grid Infrastructure and database homes) or want to install both the GI and database binaries as the oracle user.

  - The GI and database versions, and the home locations for the binaries. It is usually best to leave these at the Oracle-recommended values, as that makes future maintenance easier and less painful.

  - Disk group names, redundancy and space allocation.

  - Default database name and type (OLTP or DW).
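When deciding on redundancy and the space split between disk groups, a quick back-of-the-envelope calculation helps set expectations with the application teams. The sketch below is rough planning arithmetic only, not how OEDA sizes disk groups: it assumes ASM NORMAL redundancy keeps two copies of each extent and HIGH keeps three, ignores the free space you should keep for re-mirroring after a disk or cell failure, and uses a hypothetical raw capacity and a hypothetical 80/20 DATA/RECO split.

```python
# Rough planning arithmetic only; OEDA computes the real disk group sizes.
# ASM mirroring: NORMAL keeps 2 copies of each extent, HIGH keeps 3.
COPIES = {"NORMAL": 2, "HIGH": 3}


def usable_tb(raw_tb: float, redundancy: str) -> float:
    """Approximate usable capacity, ignoring space reserved for re-mirroring after a failure."""
    return raw_tb / COPIES[redundancy.upper()]


def split_disk_groups(raw_tb: float, redundancy: str, data_pct: float) -> dict[str, float]:
    """Split raw capacity between DATA and RECO by percentage, then apply mirroring."""
    data_raw = raw_tb * data_pct / 100
    reco_raw = raw_tb - data_raw
    return {
        "DATA": usable_tb(data_raw, redundancy),
        "RECO": usable_tb(reco_raw, redundancy),
    }


if __name__ == "__main__":
    # Hypothetical figures: 600 TB raw, HIGH redundancy, 80% to DATA.
    print(split_disk_groups(600, "HIGH", 80))  # {'DATA': 160.0, 'RECO': 40.0}
```

The same raw capacity under NORMAL redundancy would give half rather than a third of it back, which is the trade-off you are making when you pick redundancy on this screen.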

Also, if this is going to be an OVM configuration, you may want to place different VMs in different VLAN segments. OEDA lets you change the VLAN IDs for individual VMs on the respective cluster screens.

Of course, it is important to fill in every screen carefully, but the ones above in particular should be completed only after gathering the required information from other teams, where needed.